Measuring Behaviors of Peromyscus Mice from Remotely Recorded Thermal Video Using a Blob Tracking Algorithm

David Schuchart, Sebastian Pauli (Department of Mathematics and Statistics, UNCG)

Shan Suthaharan (Department of Computer Science, UNCG)

Matina Kalcounis-Rueppell (Department of Biology, UNCG)

March 2011

The results on this page were first presented at the 2nd Annual NIMBioS Conference and the 6th Annual University of North Carolina Greensboro Regional Mathematics and Statistics Conference.

Abstract

We are interested in the behavioral ecology of reproduction in mammals in their natural environment. The model mammals in our research are free-living mice of several Peromyscus species. We are specifically interested in patterns of mating, behaviors associated with mating, patterns of energy use, and acoustic communication. Because Peromyscus are nocturnal and elusive, we use a suite of direct and remote approaches to measure behavioral variables in the field. In her field work, Dr. Matina Kalcounis-Rueppell records continuous infrared video of mice over many nights. Animals are fitted with radio transmitters so that individuals can be identified. Microphones placed in the observation area capture ultrasound recordings of animal vocalizations. Together, thousands of hours of video, audio, and identity data contain a wealth of information, but these data must be processed and compiled before conclusions about behavior patterns can be drawn. Given the amount of data, this task would be very difficult without the aid of computers. We use computer vision techniques, such as blob tracking, to process the video data. This allows us to track the movement of the animals automatically and enables us, for example, to measure the speed of free-living individuals for the first time.

Introduction

Measuring the behavior of free-living, wild animals is difficult because the presence of an observer can affect the behaviors being measured. Measuring the behavior of nocturnal animals is additionally difficult because traditional methods of recording behavior, such as filming or observing from behind a blind, do not work in the dark. One solution that mitigates both difficulties is to record free-living animals remotely with thermal (IR) video. Remote thermal video recording eliminates observer bias and allows animals to be studied in the dark. However, it introduces a new problem: it can generate massive amounts of image data, especially when recording is continuous and in real time, and processing these data by hand would take a significant investment of time. We show that, using modern video and signal processing techniques, it is possible to measure behaviors directly from video data in an automated way. We analyzed two species of Peromyscus mice, P. californicus and P. boylii. On 131 nights in 2008 and 2009, continuous thermal video of free-living wild mice was recorded as part of a study examining the behavioral context of ultrasound production by these two species. We analyzed terabytes of video to quantify behaviors associated with locomotion. We used the C++ library OpenCV to isolate the mice from the background of the video. Once the mice were isolated, we used a blob-tracking algorithm, which recognizes "blobs" of similar pixels as foreground, to write the locations of the mice to a data file. Our study demonstrates that it is possible to remotely record and measure the behaviors of free-living mammals using modern data processing techniques.

Data Collection

Continuous thermal video of free-living wild mice was recorded on 131 nights in 2008 and 2009 as part of a study examining the behavioral context of ultrasound production by Peromyscus californicus and Peromyscus boylii.

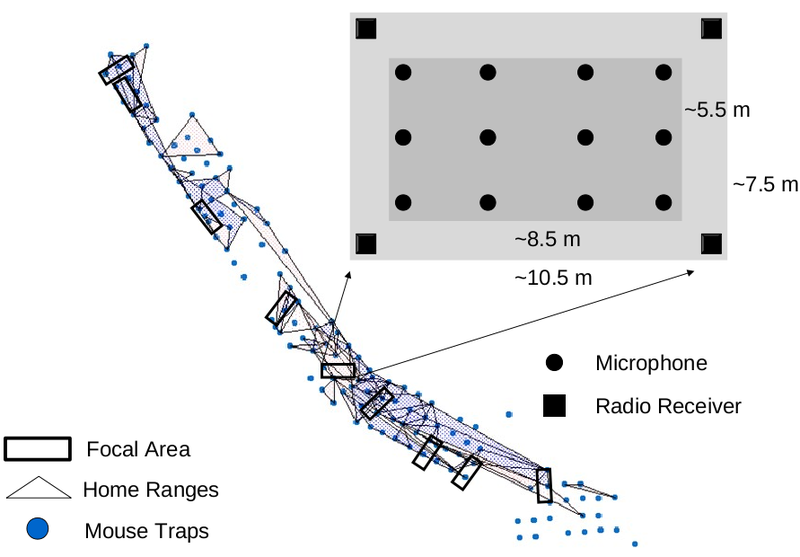

Figure 1: The focal areas for the infrared video recordings.

Figure 2: A focal area by day.

Figure 3: A still from an infrared video recording. The white blob in the lower center is a mouse.

Mouse Tracking

We want to track the movement of the mice in the infrared video recordings. This is done in several steps:

- Background subtraction

- Image clean up

- Blob detection and labeling

- Blob tracking

Background Subtraction
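The simple background model discussed in this section is an average over the frames of the video, optionally weighted by a Gaussian centred on the current frame. It can be sketched in a few lines of C++. This is a minimal illustration, not the Li et al. algorithm we ultimately used. It assumes 8-bit grayscale frames stored row by row in a `std::vector<uint8_t>`. The names `Frame`, `gaussianBackground` and `foregroundMask`, as well as the difference threshold, are hypothetical. A very large `sigma` reduces the Gaussian weighting to the plain average of all frames.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical frame type: 8-bit grayscale pixels stored row by row.
using Frame = std::vector<std::uint8_t>;

// Background estimate for frame t: an average of all frames weighted by a
// Gaussian centred on frame t. A very large sigma gives the plain mean.
std::vector<double> gaussianBackground(const std::vector<Frame>& frames,
                                       std::size_t t, double sigma) {
    assert(t < frames.size() && sigma > 0.0);
    std::vector<double> bg(frames[t].size(), 0.0);
    double weightSum = 0.0;
    for (std::size_t k = 0; k < frames.size(); ++k) {
        double d = static_cast<double>(k) - static_cast<double>(t);
        double w = std::exp(-d * d / (2.0 * sigma * sigma));
        weightSum += w;
        for (std::size_t i = 0; i < bg.size(); ++i)
            bg[i] += w * frames[k][i];
    }
    for (double& v : bg)
        v /= weightSum;
    return bg;
}

// Foreground mask: 255 where the frame differs from the background by more
// than `threshold` grey levels, 0 elsewhere.
Frame foregroundMask(const Frame& frame, const std::vector<double>& bg,
                     double threshold) {
    Frame mask(frame.size(), 0);
    for (std::size_t i = 0; i < frame.size(); ++i)
        if (std::fabs(frame[i] - bg[i]) > threshold)
            mask[i] = 255;
    return mask;
}
```

For example, with five identical frames in which one pixel of the middle frame is bright, the averaged background barely changes at that pixel, so the bright pixel ends up in the foreground mask. Because the current frame is part of the average, a short window (small `sigma`) partly absorbs the animal into the background. This is one reason such methods leave distortion.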

Because we observe mice in the wild, it is not easy to isolate them from the complex background of vegetation. Simple methods such as thresholding do not work, as the subject is too similar in color to the background. This problem can be overcome by computing an average image of all frames of the video and then subtracting this image from every frame. Unfortunately, the background of our videos is not static. This complicates the situation, because we also need to filter out sudden changes such as leaves blowing in the wind. One approach is to use a Gaussian to weight the frames of the video, with the frame under consideration at the center of the Gaussian. These methods work fairly well, but usually quite a bit of distortion remains. We found that the algorithm by Liyuan Li, Weimin Huang, Irene Y.H. Gu, and Qi Tian [6] is very well suited to our problem. It uses different learning strategies for gradual and sudden background changes, which allows it to adapt to the various changes in the background throughout the video.

Image Clean Up
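The clean up step uses two operations on the black-and-white output of background subtraction: a median (majority-vote) filter and a 3 x 3 dilation. Both can be sketched as follows. This is an illustrative sketch, not the OpenCV code we call (`cvSmooth` with `CV_MEDIAN`, and `cvDilate`). The `BinaryImage` type and the function names are hypothetical, and white (255) marks foreground. Pixels outside the image are treated as black; that is an assumption of the sketch, not OpenCV's border handling.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical binary image: 0 = black (background), 255 = white (foreground).
struct BinaryImage {
    int width;
    int height;
    std::vector<std::uint8_t> px;  // row-major, width * height pixels

    // Pixels outside the image are treated as black (an assumption of this sketch).
    std::uint8_t at(int x, int y) const {
        if (x < 0 || y < 0 || x >= width || y >= height) return 0;
        return px[y * width + x];
    }
};

// n x n median filter on a binary image: since every pixel is 0 or 255, the
// median is white exactly when more than half of the n x n square is white.
BinaryImage medianFilter(const BinaryImage& in, int n) {
    assert(n > 0 && n % 2 == 1);
    BinaryImage out{in.width, in.height, std::vector<std::uint8_t>(in.px.size(), 0)};
    const int r = n / 2;
    for (int y = 0; y < in.height; ++y)
        for (int x = 0; x < in.width; ++x) {
            int white = 0;
            for (int dy = -r; dy <= r; ++dy)
                for (int dx = -r; dx <= r; ++dx)
                    if (in.at(x + dx, y + dy)) ++white;
            if (2 * white > n * n) out.px[y * in.width + x] = 255;
        }
    return out;
}

// 3 x 3 dilation: a pixel becomes white if any pixel of its 3 x 3 square is white.
BinaryImage dilate3x3(const BinaryImage& in) {
    BinaryImage out{in.width, in.height, std::vector<std::uint8_t>(in.px.size(), 0)};
    for (int y = 0; y < in.height; ++y)
        for (int x = 0; x < in.width; ++x) {
            bool anyWhite = false;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx)
                    if (in.at(x + dx, y + dy)) anyWhite = true;
            if (anyWhite) out.px[y * in.width + x] = 255;
        }
    return out;
}
```

With a 5 x 5 window, an isolated white pixel is removed, while the interior of a 5 x 5 white square survives. A single dilation closes a one-pixel gap between two white pixels, which is how a mouse split by a twig is merged back into one blob.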

Image morphology (a sequence of dilations and erosions) is commonly used to clean up the result of background subtraction. Because the blobs we are tracking, that is, the mice, are quite small, this often removes the very blobs we are interested in. Nevertheless, distortions usually consist of clusters of only a few pixels, smaller than a mouse, so they can still be eliminated effectively. We use a median filter to clean up these small distortions. In a grayscale image, an n x n median filter (where n is an odd integer) replaces the pixel at the center of each n x n square by a pixel whose brightness is the median of the brightnesses of all the pixels in the square. In our black-and-white image, the n x n median filter colors the center pixel black if the majority of the pixels in the square are black, and white otherwise. For our purposes, a 5 x 5 median filter proved most useful. A mouse might be split into two blobs, for instance when it passes under a twig. Such blobs are only a few pixels apart, and we merge them into one blob by dilation. In a dilation step, the center pixel of every 3 x 3 square is set to white if there is at least one white pixel in the square; otherwise it remains black. Although dilation without erosion increases the size of the blobs, it does not shift their centroids (the centers of the bounding boxes) more than is acceptable for our purpose. At this point, the video should be clean enough for tracking.

Blob Tracking
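Before blobs can be tracked, they have to be found and measured in each cleaned-up frame. The sketch below labels connected groups of white pixels with a plain breadth-first flood fill using 8-connectivity. This is a deliberately simpler technique than the contour-tracing algorithm of Chang, Chen and Lu that cvBlob implements. The `Blob` struct, `findBlobs`, and the area limits are hypothetical, and the centroid is taken to be the mean pixel position.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <queue>
#include <vector>

// Hypothetical blob record: pixel count, bounding box and centroid.
struct Blob {
    int area;
    int minX, minY, maxX, maxY;
    double cx, cy;  // centroid = mean position of the blob's pixels
};

// Label 8-connected groups of white (non-zero) pixels in a row-major binary
// image and return the blobs whose area lies in [minArea, maxArea].
std::vector<Blob> findBlobs(const std::vector<std::uint8_t>& px, int width, int height,
                            int minArea, int maxArea) {
    assert(static_cast<int>(px.size()) == width * height);
    std::vector<int> label(px.size(), 0);  // 0 = unlabelled, otherwise blob label
    std::vector<Blob> blobs;
    int nextLabel = 0;
    for (int start = 0; start < width * height; ++start) {
        if (px[start] == 0 || label[start] != 0) continue;
        // Flood-fill (breadth-first) the component containing `start`.
        label[start] = ++nextLabel;
        Blob b{0, start % width, start / width, start % width, start / width, 0.0, 0.0};
        double sumX = 0.0, sumY = 0.0;
        std::queue<int> pending;
        pending.push(start);
        while (!pending.empty()) {
            const int p = pending.front();
            pending.pop();
            const int x = p % width, y = p / width;
            ++b.area;
            sumX += x;
            sumY += y;
            b.minX = std::min(b.minX, x); b.maxX = std::max(b.maxX, x);
            b.minY = std::min(b.minY, y); b.maxY = std::max(b.maxY, y);
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx) {
                    const int nx = x + dx, ny = y + dy;
                    if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                    const int n = ny * width + nx;
                    if (px[n] != 0 && label[n] == 0) {
                        label[n] = nextLabel;
                        pending.push(n);
                    }
                }
        }
        b.cx = sumX / b.area;
        b.cy = sumY / b.area;
        if (b.area >= minArea && b.area <= maxArea) blobs.push_back(b);
    }
    return blobs;
}
```

The size limits play the role of the minimum and maximum blob size: raising `minArea` above 1 discards single-pixel noise that survived the clean up.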

Tracking works by identifying blobs of similarly colored pixels in a frame and analyzing how they move from one frame to the next. Each blob is given an arbitrary label so that we know which blob we are following from one frame to the next. Parameters set at this stage include the maximum distance a blob can travel between frames and still be considered part of the same track, and the minimum and maximum size a blob must have in order to be tracked. As each blob is tracked, its tracking information is written to a text file. The tracking information contains a list of all blobs found in each frame. Each entry gives the blob's position and size, together with an identifier that allows the blob to be followed over consecutive frames. Some blobs do not belong to the track of any mouse or other animal. Examples are disturbances caused by gusts of wind that were not eliminated by the background subtraction and image clean up. Such blobs produce very short tracks, which can be eliminated on these grounds.

Post Processing
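The first part of post processing regroups the per-frame tracking output into tracks, discards very short tracks, and derives a travelling speed. It can be sketched as follows. Our post processing was written in Python; this C++ sketch only illustrates the idea. The following are all hypothetical: the `Observation` and `TrackSummary` types, `summarizeTracks`, the scale factor `cmPerPixel` (which would come from the measured dimensions of the filmed area), and the size threshold `maxMouseArea` used to separate mice from larger animals such as rats.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <map>
#include <vector>

// One tracked blob in one frame (a hypothetical subset of the tracker output).
struct Observation {
    int frame;       // frame number
    int trackId;     // identifier assigned by the tracker
    double x, y;     // centroid in pixels
    double boxArea;  // bounding-box area in square pixels
};

// Per-track summary produced by post processing.
struct TrackSummary {
    int trackId;
    std::size_t frames;
    double meanBoxArea;
    double speedCmPerS;  // path length divided by elapsed time
    bool likelyMouse;    // mean box area at most maxMouseArea (hypothetical rule)
};

// Regroup frame-ordered observations into tracks, drop tracks seen in fewer
// than minFrames frames, and compute a mean travelling speed per track.
std::vector<TrackSummary> summarizeTracks(const std::vector<Observation>& obs,
                                          double cmPerPixel, double fps,
                                          std::size_t minFrames, double maxMouseArea) {
    assert(fps > 0.0);
    std::map<int, std::vector<Observation>> byTrack;
    for (const Observation& o : obs)
        byTrack[o.trackId].push_back(o);

    std::vector<TrackSummary> out;
    for (const auto& entry : byTrack) {
        const std::vector<Observation>& track = entry.second;
        if (track.size() < minFrames) continue;  // very short tracks are noise
        double areaSum = 0.0, pathPx = 0.0;
        for (std::size_t i = 0; i < track.size(); ++i) {
            areaSum += track[i].boxArea;
            if (i > 0)
                pathPx += std::hypot(track[i].x - track[i - 1].x,
                                     track[i].y - track[i - 1].y);
        }
        const double seconds = (track.back().frame - track.front().frame) / fps;
        const double speed = seconds > 0.0 ? pathPx * cmPerPixel / seconds : 0.0;
        const double meanArea = areaSum / track.size();
        out.push_back({entry.first, track.size(), meanArea, speed, meanArea <= maxMouseArea});
    }
    return out;
}
```

For example, consider a track that moves 5 pixels in each of two steps at 2 frames per second, with 0.5 cm per pixel. It covers 5 cm in 1 s, so its speed is 5 cm/s. A track seen in a single frame is dropped when `minFrames` is 3.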

In the post processing step we transform the tracking information, which is organized by frame, into a list of tracks. We use the size information included in the tracking output to distinguish mice from other animals (in our case mostly rats). We also clean up the track data further. Furthermore, we combine the track information with data from sources other than the video. In our case we added environmental data, such as weather information. We also added data from other parts of the observation setup: information about the presence of tagged mice and about mouse calls, obtained from audio recordings and from human observations. Finally, we computed the dimensions of the area recorded in the video, which allows us to compute, for example, the travelling speed of mice in the post processing step.

Implementation

The video processing was implemented in C++ using the libraries OpenCV and cvBlob. Both libraries are freely available; OpenCV is released under a BSD licence and cvBlob under the LGPL. OpenCV provides implementations of the algorithms needed for the background subtraction and image clean up steps. cvBlob offers the functionality needed for the blob tracking step. For installation instructions, see the web sites given in the references. For an introduction to OpenCV, see Learning OpenCV: Computer Vision with the OpenCV Library by Gary Bradski and Adrian Kaehler (O'Reilly, 2008).

The functions for post processing were written in Python. Our blob tracking program outputs the tracking information as a list of Python dictionaries, which can then easily be imported into Python and processed. The programs for video processing and post processing were called from a shell script, so that several hundred videos could be processed in one batch. All software used is freely available for MS Windows, Linux/Unix, and Mac OS X; see the references for the web sites from which it can be obtained. Python is included in most Linux distributions and in Mac OS X. We developed our implementations under Ubuntu Linux.

In the following we give some details about the library functions called by our programs and about the parameters we used. For the background subtraction we use the algorithm described in Liyuan Li, Weimin Huang, Irene Y.H. Gu, and Qi Tian, Foreground Object Detection from Videos Containing Complex Background (ACM MM 2003), which is implemented in OpenCV. We had to reduce the minimal area (minArea) of a foreground object to 12 so that the mice would not be filtered out; otherwise we used the standard parameters.
The library cvBlob, written by Cristóbal Carnero Liñán, contains an implementation of the blob labelling algorithm from A linear-time component-labeling algorithm using contour tracing technique by Fu Chang, Chun-Jen Chen and Chi-Jen Lu (Computer Vision and Image Understanding, 2003). We found that the simple blob tracking methods from cvBlob were sufficient in our case. In the blob tracking step we do not allow a blob to move by more than 10 pixels from frame to frame. We abandon blobs that have vanished for more than 10 frames, and we require tracks to be active for at least 10 frames.
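A simplified tracker with the three limits above can be sketched as follows. It is not cvBlob's matching algorithm. It greedily assigns each existing track the nearest unmatched blob centroid within `maxDistance`, and drops tracks that have been missing for more than `maxInactive` frames. Mirroring the documented behavior of cvBlob's `thActive` parameter, it also drops a track as soon as it goes missing if the track was active in fewer than `minActive` frames. The `SimpleTracker` class and its members are hypothetical; the defaults are the values stated in the text.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Point { double x, y; };

struct Track {
    int id;
    Point centroid;
    int active;    // frames in which the track was matched to a blob
    int inactive;  // consecutive frames without a matching blob
};

// Simplified greedy nearest-centroid tracker (not cvBlob's algorithm).
class SimpleTracker {
public:
    explicit SimpleTracker(double maxDistance = 10.0, int maxInactive = 10, int minActive = 10)
        : maxDistance_(maxDistance), maxInactive_(maxInactive), minActive_(minActive) {}

    // Update the tracks with the blob centroids detected in the current frame.
    void update(const std::vector<Point>& blobs) {
        std::vector<bool> used(blobs.size(), false);
        for (Track& t : tracks_) {
            // Closest unused blob within maxDistance of the track's last position.
            int best = -1;
            double bestDist = maxDistance_;
            for (std::size_t i = 0; i < blobs.size(); ++i) {
                double d = std::hypot(blobs[i].x - t.centroid.x, blobs[i].y - t.centroid.y);
                if (!used[i] && d <= bestDist) { best = static_cast<int>(i); bestDist = d; }
            }
            if (best >= 0) {
                used[best] = true;
                t.centroid = blobs[best];
                ++t.active;
                t.inactive = 0;
            } else {
                ++t.inactive;
            }
        }
        // Abandon tracks missing for too long, and short tracks that went missing.
        std::vector<Track> kept;
        for (const Track& t : tracks_)
            if (t.inactive <= maxInactive_ && !(t.inactive > 0 && t.active < minActive_))
                kept.push_back(t);
        tracks_ = kept;
        // Every unmatched blob starts a new track.
        for (std::size_t i = 0; i < blobs.size(); ++i)
            if (!used[i]) tracks_.push_back({nextId_++, blobs[i], 1, 0});
    }

    const std::vector<Track>& tracks() const { return tracks_; }

private:
    double maxDistance_;
    int maxInactive_;
    int minActive_;
    int nextId_ = 1;
    std::vector<Track> tracks_;
};
```

Greedy matching is adequate when animals are far apart relative to `maxDistance`, as with a few mice in the focal area. When two blobs pass within that distance of each other, their identities can be swapped.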

```cpp
#include <cstdio>
#include <iostream>

#include <cv.h>
#include <cvaux.h>
#include <highgui.h>
#include <cvblob.h>

using namespace std;
using namespace cvb;

int main(int argc, char** argv)
{
    /* Declare variables for tracking */
    CvTracks tracks;
    double seconds, frameCount = 1;

    /* If no argument is given, report an error */
    if (argc < 2)
    {
        fprintf(stderr, "Please provide a video file.\n");
        return -1;
    }

    /* Start capturing */
    CvCapture* capture = cvCaptureFromFile(argv[1]);
    if (!capture)
    {
        fprintf(stderr, "Could not open video file.\n");
        return -1;
    }

    /* Get the FPS of the video */
    double fps = cvGetCaptureProperty(capture, CV_CAP_PROP_FPS);

    /* Capture 1 video frame for initialization */
    IplImage* frame = cvQueryFrame(capture);
    if (!frame)
    {
        fprintf(stderr, "Could not read a frame from the video file.\n");
        cvReleaseCapture(&capture);
        return -1;
    }

    /* Get the width and height of the video */
    CvSize size = cvSize((int) cvGetCaptureProperty(capture, CV_CAP_PROP_FRAME_WIDTH),
                         (int) cvGetCaptureProperty(capture, CV_CAP_PROP_FRAME_HEIGHT));

    /* Parameters for background subtraction: OpenCV defaults except minArea */
    CvFGDStatModelParams params;
    params.Lc = CV_BGFG_FGD_LC;
    params.N1c = CV_BGFG_FGD_N1C;
    params.N2c = CV_BGFG_FGD_N2C;
    params.Lcc = CV_BGFG_FGD_LCC;
    params.N1cc = CV_BGFG_FGD_N1CC;
    params.N2cc = CV_BGFG_FGD_N2CC;
    params.delta = CV_BGFG_FGD_DELTA;
    params.alpha1 = CV_BGFG_FGD_ALPHA_1;
    params.alpha2 = CV_BGFG_FGD_ALPHA_2;
    params.alpha3 = CV_BGFG_FGD_ALPHA_3;
    params.T = CV_BGFG_FGD_T;
    params.minArea = 4.f; /* default: CV_BGFG_FGD_MINAREA */
    params.is_obj_without_holes = 1;
    params.perform_morphing = 0;

    /* Create CvBGStatModel for background subtraction */
    CvBGStatModel* bgModel = cvCreateFGDStatModel(frame, &params);

    /* Working images of the same size as the video frames */
    IplImage* temp = cvCreateImage(size, IPL_DEPTH_8U, 1);
    IplImage* labelImg = cvCreateImage(size, IPL_DEPTH_LABEL, 1);

    /* The tracks are written as a list of dictionaries in Python syntax: start the list */
    cout << "[" << endl;

    /* Loop through all remaining frames of the video */
    while ((frame = cvQueryFrame(capture)) != NULL)
    {
        /* Update background model */
        cvUpdateBGStatModel(frame, bgModel);

        /* Apply a 5 x 5 median filter to eliminate blobs too small to be mice */
        cvSmooth(bgModel->foreground, temp, CV_MEDIAN, 5);

        /* Dilate twice (3 x 3) to merge blobs that belong to the same mouse */
        cvDilate(temp, temp);
        cvDilate(temp, temp);

        /* Time stamp of the current frame in seconds */
        seconds = frameCount / fps;

        /* Detect and label blobs */
        CvBlobs blobs;
        cvLabel(temp, labelImg, blobs);

        /* Update the tracks: a blob within 100 pixels continues a track,
           tracks inactive for more than 5 frames are removed */
        cvUpdateTracks(blobs, tracks, 100., 5);

        /* Output one Python dictionary per frame that has tracks */
        if (tracks.size() > 0)
        {
            cout << "{'Time':" << seconds << ",'Frame Number':" << frameCount << ",'Tracks':[";
            for (CvTracks::const_iterator it = tracks.begin(); it != tracks.end(); ++it)
            {
                /* Separate consecutive track dictionaries with a comma */
                if (it != tracks.begin())
                    cout << ",";
                cout << "{'Track number':" << it->second->id << ",";
                cout << "'Lifetime':" << it->second->lifetime << ",";
                cout << "'Frames Active':" << it->second->active << ",";
                cout << "'Frames Inactive':" << it->second->inactive << ",";
                cout << "'Bounding Box Min X':" << it->second->minx << ",";
                cout << "'Bounding Box Min Y':" << it->second->miny << ",";
                cout << "'Bounding Box Max X':" << it->second->maxx << ",";
                cout << "'Bounding Box Max Y':" << it->second->maxy << ",";
                cout << "'X':" << it->second->centroid.x << ",";
                cout << "'Y':" << it->second->centroid.y << "}";
            }
            cout << "]}," << endl;

            /* Draw track IDs and bounding boxes into the frame */
            cvRenderTracks(tracks, frame, frame, CV_TRACK_RENDER_ID | CV_TRACK_RENDER_BOUNDING_BOX);
        }

        /* Release current blob information */
        cvReleaseBlobs(blobs);

        /* Update the frame counter */
        frameCount++;
        cvWaitKey(2);
    }

    /* Close the list of tracks */
    cout << "]" << endl << endl;

    /* After we're all done, clean up */
    cvReleaseImage(&temp);
    cvReleaseImage(&labelImg);
    cvReleaseBGStatModel(&bgModel);
    cvReleaseCapture(&capture);
    cvReleaseTracks(tracks);
    return 0;
}
```

mouse-tracking.cpp: The source code for background subtraction and blob tracking. The tracking information is output as a list of Python dictionaries, which can then easily be processed with Python scripts.

Acknowledgements

This project was supported by the National Science Foundation (Grant IOB-0641530 and the NSF Math-Bio Undergraduate Fellowship at UNCG). We would like to thank the Office of Undergraduate Research of UNCG and in particular its director, Mary Crowe. Thanks also go to Radmila Petric, Jessica Briggs, Kitty Carney, and all the students who worked in the field collecting data. Furthermore, we would like to thank the Hastings Natural History Reserve for all of their support of our field work.